Running Anaconda's Python from WSL (Windows Subsystem for Linux) reportedly makes the GPU (CUDA + cuDNN) usable, so I tried it

What I tried

Create a Python virtual environment with Anaconda (Windows), run that environment's Python from WSL, and use the GPU.

Usage

Execution environment

- Windows 10 (1903)

- NVIDIA GPU Computing Toolkit CUDA v10.1

- Anaconda3

- Linux Ubuntu 18.04 LTS (WSL)

Create an Anaconda Python virtual environment

Launch Anaconda Prompt.

(base) >

Create an Anaconda Python virtual environment and activate it.

Here "envname" is the name of the virtual environment.

(base) > conda create -n envname python=3.7

(base) > activate envname

(envname) >

Run the Anaconda environment's Python from WSL

Launch WSL from Anaconda Prompt.

(envname) > wsl

(WSL)$

Set aliases so that the Anaconda environment's Python and pip are the ones that run.

(WSL)$ alias python="python.exe"

(WSL)$ alias pip="pip.exe"

Run python and confirm that it is the Anaconda environment's Python.

(WSL)$ python

Python 3.7.5 ~ :: Anaconda, Inc.

Type "help", "copyright", "credits" or "license" for more information.

>>> exit()

(WSL)$
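The banner alone does not show where the interpreter lives. As a minimal sketch, the following script reports the interpreter path and host OS; `describe_interpreter` is a hypothetical helper name, not something from Anaconda or WSL. With the alias in effect, the system should be reported as "Windows" even though the command was typed in a WSL shell.

```python
import platform
import sys


def describe_interpreter():
    """Return the path, host OS, and version of the running Python interpreter."""
    return {
        "executable": sys.executable,          # full path of the interpreter binary
        "system": platform.system(),           # "Windows" for python.exe, "Linux" for a WSL-native python
        "version": platform.python_version(),  # e.g. the 3.7.x of the conda environment
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```

If the output shows "Linux" instead, the alias is probably not active in the current shell and a WSL-native python was picked up.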

If there are other Windows binaries (.exe) you want to run, alias them the same way; it is probably a good idea to put the aliases in .bashrc.

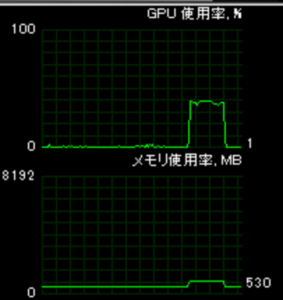

Check whether the GPU is used when running Python

Use NNabla (Neural Network Libraries) to check whether the GPU works.

NNabla is an AI (machine learning / neural network) framework provided by Sony.

(WSL)$ mkdir -p your/path/nnabla

(WSL)$ cd your/path/nnabla

(WSL)$ pip install nnabla-ext-cuda101

(WSL)$ git clone https://github.com/sony/nnabla-examples.git

(WSL)$ cd nnabla-examples/mnist-collection/
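Before running the full sample, it can help to check that the cuDNN extension loads at all. The sketch below assumes `nnabla-ext-cuda101` was installed as above and uses `get_extension_context` from `nnabla.ext_utils`; `cudnn_context_loads` is a hypothetical helper name. It only checks that the extension can be imported and set as the default context, not that a computation actually runs on the GPU.

```python
def cudnn_context_loads(device_id="0"):
    """Return True if nnabla's cuDNN extension context can be created and set."""
    try:
        import nnabla as nn
        from nnabla.ext_utils import get_extension_context
    except ImportError:
        # nnabla itself is not installed in this Python environment.
        return False
    try:
        # Importing the extension fails here if CUDA/cuDNN libraries are missing.
        ctx = get_extension_context("cudnn", device_id=device_id)
        nn.set_default_context(ctx)
    except Exception:
        return False
    return True


if __name__ == "__main__":
    print("cuDNN context available:", cudnn_context_loads())
```

A result of False suggests looking at the CUDA toolkit and cuDNN installation on the Windows side first, since the interpreter being run is the Windows one.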

Running the MNIST sample on the GPU (CUDA + cuDNN) finishes in about 30 seconds.

(WSL)$ python classification.py -c cudnn

2019-12-23 02:33:32,107 [nnabla][INFO]: Initializing CPU extension...

2019-12-23 02:33:32,405 [nnabla][INFO]: Running in cudnn

2019-12-23 02:33:32,421 [nnabla][INFO]: Initializing CUDA extension...

2019-12-23 02:33:32,423 [nnabla][INFO]: Initializing cuDNN extension...

~~

2019-12-23 02:33:33,800 [nnabla][INFO]: Parameter save (.h5): tmp.monitor\params_000000.h5

2019-12-23 02:33:33,827 [nnabla][INFO]: iter=9 {Training loss}=2.210261583328247

2019-12-23 02:33:33,827 [nnabla][INFO]: iter=9 {Training error}=0.79453125

~~

2019-12-23 02:33:59,986 [nnabla][INFO]: iter=9999 {Training loss}=0.006541474722325802

2019-12-23 02:33:59,987 [nnabla][INFO]: iter=9999 {Training error}=0.003125

2019-12-23 02:33:59,987 [nnabla][INFO]: iter=9999 {Training time}=0.26130175590515137[sec/100iter] 26.61487102508545[sec]

2019-12-23 02:33:59,999 [nnabla][INFO]: iter=9999 {Test error}=0.00703125

For comparison, running on the CPU only took about 7 minutes.

(WSL)$ python classification.py

2019-12-23 02:37:54,749 [nnabla][INFO]: Initializing CPU extension...

2019-12-23 02:37:55,041 [nnabla][INFO]: Running in cpu

~~

2019-12-23 02:37:55,455 [nnabla][INFO]: Parameter save (.h5): tmp.monitor\params_000000.h5

2019-12-23 02:37:55,850 [nnabla][INFO]: iter=9 {Training loss}=2.210261344909668

2019-12-23 02:37:55,851 [nnabla][INFO]: iter=9 {Training error}=0.79453125

~~

2019-12-23 02:44:47,789 [nnabla][INFO]: iter=9999 {Training loss}=0.005216401536017656

2019-12-23 02:44:47,790 [nnabla][INFO]: iter=9999 {Training error}=0.00234375

2019-12-23 02:44:47,790 [nnabla][INFO]: iter=9999 {Training time}=4.1329545974731445[sec/100iter] 412.7419340610504[sec]

2019-12-23 02:44:47,978 [nnabla][INFO]: iter=9999 {Test error}=0.00859375

So it looks like I was able to run the Anaconda environment's Python from WSL and use the GPU (CUDA + cuDNN).
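For a rough sense of the speedup, the two final "Training time" lines logged above can be compared. This is a minimal sketch; the function and constant names are hypothetical, and the regular expression assumes the log format shown in this post.

```python
import re

# Final "Training time" log lines copied from the GPU and CPU runs above.
GPU_LINE = ("2019-12-23 02:33:59,987 [nnabla][INFO]: iter=9999 "
            "{Training time}=0.26130175590515137[sec/100iter] 26.61487102508545[sec]")
CPU_LINE = ("2019-12-23 02:44:47,790 [nnabla][INFO]: iter=9999 "
            "{Training time}=4.1329545974731445[sec/100iter] 412.7419340610504[sec]")

TIME_PATTERN = re.compile(
    r"\{Training time\}=([0-9.]+)\[sec/100iter\] ([0-9.]+)\[sec\]"
)


def parse_training_time(line):
    """Return (seconds per 100 iterations, total seconds) from a log line."""
    match = TIME_PATTERN.search(line)
    if match is None:
        raise ValueError("no training time found in: " + line)
    return float(match.group(1)), float(match.group(2))


def speedup(cpu_line, gpu_line):
    """Return how many times faster the GPU run was, based on total seconds."""
    _, cpu_total = parse_training_time(cpu_line)
    _, gpu_total = parse_training_time(gpu_line)
    return cpu_total / gpu_total


if __name__ == "__main__":
    print(f"GPU speedup over CPU: {speedup(CPU_LINE, GPU_LINE):.1f}x")
```

With these two lines the ratio comes out at roughly 15.5, which matches the "about 30 seconds versus about 7 minutes" impression from the wall-clock timestamps.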

There are still some problems with pip and conda, but since it ran on the GPU, I'll call it good enough for now.
